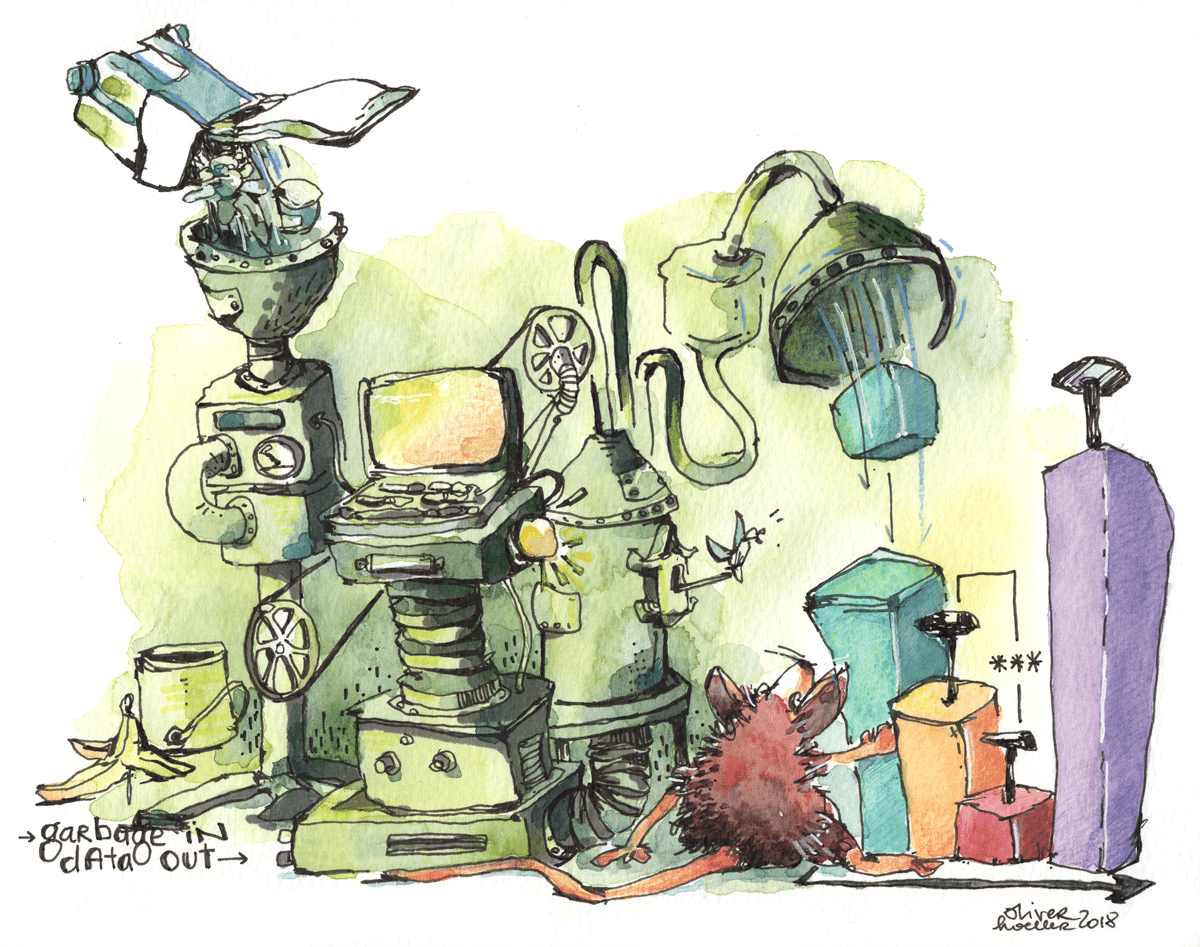

In the article “Enterprise Leaders Should Ask How, Not If, They Should Use AI” a ve ry unspoken aspect of what is required to make artificial intelligence effective is lots of clean data. Daniel Elman of Nucleus Research outlines in his report this need.

ry unspoken aspect of what is required to make artificial intelligence effective is lots of clean data. Daniel Elman of Nucleus Research outlines in his report this need.

The report acknowledges one of the factors holding businesses back from introducing machine learning systems: the massive amounts of data needed to accurately train the system. While this is fine in a research setting where the dataset can be painstakingly curated, labeled, and studied before being fed to the model for training, but in a business environment, data is often incomplete, irregular, or too siloed to efficiently aggregate in the necessary quantities.

When considering whether or not AI and machine learning (ML) should be brought into the enterprise, it cannot be stressed enough that companies looking to implement machine learning capabilities for their business must already have a mature data culture with institutional knowledge and practices for data collection, preparation, storage, and governance, Elman told CMSWire. “Vendors can sell out-of-the-box models trained on general third-party data for simple applications like sentiment detection in email, lead and opportunity scoring, or employee turnover, but for machine learning that is fully optimized for a specific process, years’ worth of historical data is often required,” he said.

So before you can take on any culture changes, predict anything, or extract value out of your data – you need it to be clean, tagged and complete.

So before you can take on any culture changes, predict anything, or extract value out of your data – you need it to be clean, tagged and complete.

InfoDNA Solutions has expertise in this and their product Topla Intensify is designed to help crawl across your enterprise content repositories and bring back a single ‘collection’ that helps identify and delineate the ROT-from-the-real in your document stores. A primary premise that is more relevant than ever – garbage-in-garbage-out.